Using Service Mesh to enhance Communications and Governance of Microservices

Yesterday, the .NET Mafia, C# Mobsters and other communities from the Microsoft family hosted an amazing event about Microservices. The event had three speakers, and I had the pleasure of being one of them. The first speaker, Ferdinando Aprovitolo, explained how to use the gRPC framework, and the benefits it brings, to ensure high-performance communication between microservices. Then, I explained how you can leverage Service Mesh to enhance communications and governance of microservices. The third speaker, Diego Tondim, closed the presentations by explaining how to gain insights into your microservices with Elastic APM.

Context

When you design your application using a Microservices architecture, the application is composed of a collection of loosely coupled services. With this architecture design, you gain several benefits, such as:

- increased resiliency - with a loosely coupled architecture, a failure in one of the services causes minimal or no impact to the other services

- improved scalability - you can scale individual services, depending on the load, without having to scale the whole application, as you would in a monolithic architecture

- improved productivity - since your developers can work in smaller increments of your services and independently test and deploy those increments

Together with these benefits come challenges as well, since this type of architecture automatically increases the complexity of your application when compared with a monolithic application. Some of these challenges are the following:

- Fault Tolerance - a microservices application needs to be fault tolerant, so that when a service fails, the application is still able to work normally and recover from the failure.

- Dependencies - depending on the complexity of your application, you are sometimes not able to fully decouple the services from each other, and you end up with inter-dependencies between services. Consequently, it may be challenging to manage changes and deployments to those services.

- Tracing and Monitoring - since your application is composed of several services, you need a distributed tracing method to be able to trace it effectively. This might sound simple, but collecting the right traces in the right context requires considerable time and effort.

- Communications Performance - As opposed to monolithic applications, where you use in-process calls, with microservices you use remote calls to establish communications between your services, and these calls are slower. So, you need to think carefully about when and how your services communicate.

- Service Discovery - Your application might be composed of several services that need to communicate with each other, so you need to ensure that each service is able to discover the other services. This may require considerable time and effort to manage.
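To make the Fault Tolerance challenge above a little more concrete, here is a minimal Python sketch of the retry-and-fallback logic a calling service might otherwise have to carry in its own code. It is only an illustration and not part of the talk: `call_with_retry`, `make_flaky_service`, the number of attempts and the delays are all hypothetical names and values.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    operation: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try a remote call a few times, then degrade to a fallback.

    Hypothetical helper: waits base_delay, 2 * base_delay, ... between attempts.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            # The last attempt failed: stop retrying and use the fallback.
            if attempt == attempts - 1:
                break
            sleep(base_delay * (2 ** attempt))
    return fallback()


def make_flaky_service(failures: int) -> Callable[[], str]:
    """Simulated remote service that fails `failures` times, then succeeds."""
    state = {"remaining": failures}

    def service() -> str:
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise ConnectionError("service unavailable")
        return "live response"

    return service


# The simulated service fails twice, so the third attempt succeeds.
result = call_with_retry(
    make_flaky_service(2), lambda: "cached response", base_delay=0.01
)
print(result)  # prints "live response"
```

Every service that makes remote calls would need some variation of this logic, which is part of the added complexity when compared with in-process calls.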

There is an interesting article, published by NGINX, that shares some of the challenges that Netflix faced when it started to adopt Microservices and redesign its applications.

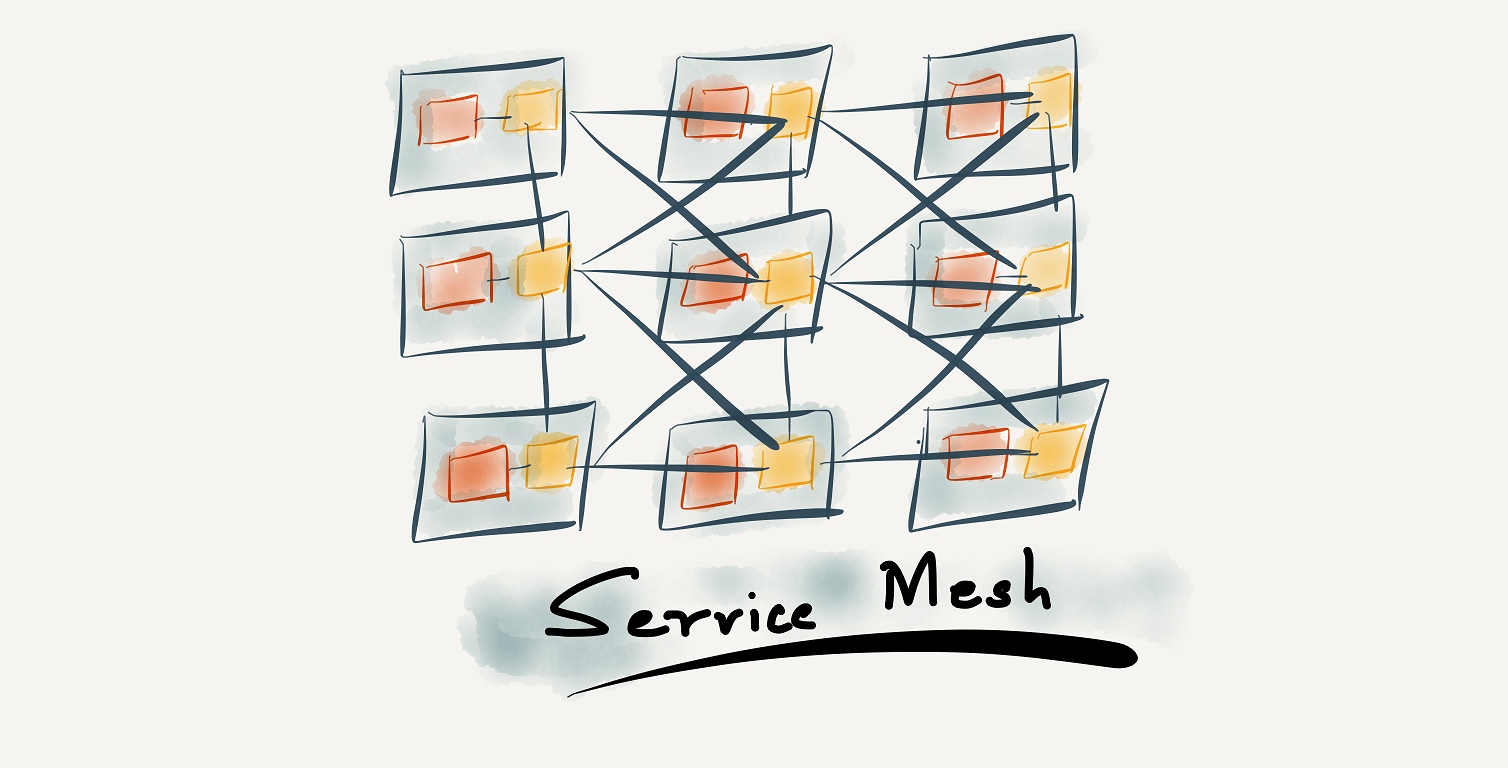

Service Mesh

Service Mesh is an infrastructure layer designed to handle a high volume of network-based inter-process communication among the services that compose your application. It also provides an extensive set of features that allow you to overcome some of the challenges, mentioned above, that you can face in microservices applications. The picture below shows the Service Mesh architecture. As you can see, it is composed of two planes: the control plane and the data plane. The data plane manages the communications between the services by using instances of sidecar proxies, one instance per service. The control plane manages the configuration required to control the data plane’s behaviour.
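To make the split between the two planes more tangible, here is a toy Python model. It is only a sketch under simplifying assumptions: the class names `ControlPlane` and `SidecarProxy`, the in-memory routing table and the service names are all hypothetical, and a real mesh such as Istio distributes configuration over the network rather than through direct method calls.

```python
from typing import Callable, Dict, List

Handler = Callable[[str], str]


class SidecarProxy:
    """Data plane: one proxy instance sits next to each service."""

    def __init__(self, service_name: str, handler: Handler) -> None:
        self.service_name = service_name
        self.handler = handler
        # Routing table, filled in by the control plane.
        self.routes: Dict[str, "SidecarProxy"] = {}

    def send(self, destination: str, message: str) -> str:
        # Outbound traffic leaves through the local proxy, which looks up
        # the destination's proxy in the routes pushed by the control plane.
        if destination not in self.routes:
            raise LookupError(f"{self.service_name}: no route to {destination}")
        return self.routes[destination].receive(message)

    def receive(self, message: str) -> str:
        # Inbound traffic reaches the service only through its proxy.
        return self.handler(message)


class ControlPlane:
    """Control plane: holds the configuration and pushes it to every proxy."""

    def __init__(self) -> None:
        self.proxies: List[SidecarProxy] = []

    def register(self, service_name: str, handler: Handler) -> SidecarProxy:
        proxy = SidecarProxy(service_name, handler)
        self.proxies.append(proxy)
        self.push_config()
        return proxy

    def push_config(self) -> None:
        # Every proxy receives the same, up-to-date routing table.
        table = {p.service_name: p for p in self.proxies}
        for proxy in self.proxies:
            proxy.routes = dict(table)


mesh = ControlPlane()
orders = mesh.register("orders", lambda msg: f"orders handled: {msg}")
payments = mesh.register("payments", lambda msg: f"payments handled: {msg}")
print(orders.send("payments", "charge order 42"))
```

In this model the services never talk to each other directly: all traffic goes through the proxies, and only the control plane changes how the proxies behave.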

Service Mesh provides several functions that deliver the core features listed below. As I said above, some of these features allow you to more easily overcome some of the challenges of adopting a Microservices architecture for your applications.

Demo

To show this working in practice, I prepared a demo application with the following components:

- Kubernetes for Container Orchestration

- Minikube to run the Kubernetes cluster on my local machine

- VirtualBox to virtualize my environment and create a virtual machine to host the cluster

- Istio as Service Mesh solution

- Grafana for Tracing and Monitoring my Microservices application

- Kiali to manage the Service Mesh configuration

- Docker to manage the containers to deploy in the cluster

Step 1 - Install Docker

The first step is to install Docker on your local machine. You can follow the Install Guide provided on Docker’s official website.

Step 2 - Install Minikube

The next step is to install Minikube on your local machine, which will enable you to create your Kubernetes cluster. You can follow the Install Guide provided on the official Kubernetes website.

Step 3 - Run Minikube

The next step is to run Minikube. Be careful with the amount of memory and the number of CPUs you allocate, otherwise you may face some issues when running your cluster in Minikube, as I did. To solve the issues, I ended up increasing the memory and CPUs, and the final configuration was the following:

minikube start --memory=16384 --cpus=4

Also, once you have Minikube running, you need to activate tunnelling (minikube tunnel) so that you can access your services from outside your cluster.

Step 4 - Install Istio

Istio has a really comprehensive article that explains all the steps to install Istio in your Kubernetes cluster. It also provides a demo app that allows you to easily get a Kubernetes cluster with Istio installed and start using it. While configuring Istio in your cluster, I recommend using the automatic injection option for the sidecar proxies, so that Istio injects the sidecar proxy every time you deploy a new service and you do not need to manage it yourself.

Step 5 - Test Demo App

Follow these steps to test your app:

- Get the external IP for your cluster from the terminal (it is shown in the terminal where you activated the minikube tunnel)

- Validate that the demo app is running (e.g. http://192.168.99.101:31380/productpage)

- Validate that the Grafana service is running by running the following command

kubectl -n istio-system get svc grafana

- Get the name of Grafana’s pod

kubectl -n istio-system get pod -l app=grafana -o jsonpath='{.items[0].metadata.name}'

- Enable port forwarding for Grafana’s service

kubectl -n istio-system port-forward grafana-nnnnnnnnn 3000:3000

- Enable port forwarding for Kiali’s service

kubectl -n istio-system port-forward kiali-nnnnnnnnnnnn 20001:20001

- Open Grafana’s dashboard (e.g. http://localhost:3000/?orgId=1)

- Open Kiali’s dashboard (e.g. http://localhost:20001/kiali/)

Note: Ports and domains can vary depending on the configuration of your local machine. Please run kubectl commands against your cluster to get the ports of the different services running in it.
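One possible way to look up those ports is to ask kubectl for a service definition as JSON and read its `spec.ports` entries. The Python sketch below assumes that approach: the helper names `kubectl_service_json` and `service_ports` are hypothetical, and the sample payload is a trimmed, made-up stand-in for what `kubectl get svc -o json` returns, not output captured from the demo cluster.

```python
import json
import subprocess
from typing import Dict, List


def kubectl_service_json(name: str, namespace: str = "istio-system") -> str:
    """Return a Service definition as JSON text (requires kubectl on PATH)."""
    result = subprocess.run(
        ["kubectl", "-n", namespace, "get", "svc", name, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def service_ports(service_json: str) -> List[Dict[str, object]]:
    """Extract name, port and nodePort (if any) from a Service definition."""
    service = json.loads(service_json)
    ports = []
    for entry in service.get("spec", {}).get("ports", []):
        ports.append(
            {
                "name": entry.get("name"),
                "port": entry.get("port"),
                # nodePort is only set for NodePort/LoadBalancer services.
                "node_port": entry.get("nodePort"),
            }
        )
    return ports


# Made-up sample standing in for real kubectl output.
SAMPLE = json.dumps(
    {
        "kind": "Service",
        "metadata": {"name": "grafana", "namespace": "istio-system"},
        "spec": {"type": "ClusterIP", "ports": [{"name": "http", "port": 3000}]},
    }
)

print(service_ports(SAMPLE))
```

Against a live cluster, `service_ports(kubectl_service_json("grafana"))` would read the same fields from the real service instead of the sample.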

In this scenario we are running our application in a local environment to test Service Mesh. If you want to build your applications and prepare them for production environments, I recommend using Azure Kubernetes Service (AKS), since it is a managed service that abstracts the environment running your Kubernetes cluster and lets you focus on your application. It also provides features such as elastic provisioning, which allows you to increase or decrease the capacity of your cluster; improved productivity through the integrations with Visual Studio Code Kubernetes Tools, Azure DevOps and Azure Monitor; advanced identity and access management through integration with Azure Active Directory; and more.

Initially, configuring and using Service Mesh in your Microservices application can look really complex, but once you start using it, things become clearer with time.

It is important to note that Service Mesh can be overkill for small applications, since it requires significant time, effort and expertise to configure and manage properly.

I also invite you to have a look at the presentation slides that I shared in my GitHub repo.