Building Intelligent Enterprise-Grade Applications with Azure OpenAI and Microsoft Data Platform

Introduction

In the rapidly evolving landscape of artificial intelligence, certain innovations stand out, heralding new horizons for both technology and society. One organization at the forefront is OpenAI, which has become synonymous with cutting-edge AI research and applications. Established with a mission to ensure that artificial general intelligence benefits all of humanity, OpenAI has been at the vanguard of a specific AI niche that’s been capturing imaginations worldwide: generative AI.

Generative AI refers to models and algorithms designed to produce content. Instead of just processing and analyzing input data, these models generate new data that wasn’t part of their training set. In this realm, one of the most revolutionary developments has been the advent of large language models. These are essentially vast neural networks trained on enormous amounts of text data, enabling them to generate human-like text based on the patterns they’ve learned.

OpenAI’s contributions, particularly its GPT (Generative Pre-trained Transformer) series, exemplify what large language models can achieve. These models can compose essays, answer questions, write code, create poetry, and even generate entirely new concepts, all while maintaining a coherence and nuance that is often hard to distinguish from human writing.

As we stand at this intersection of innovation, it’s crucial to understand the capabilities and potential implications of these technologies. They are not just tools for automation but are reshaping our understanding of machine intelligence and its role in the human experience.

When it comes to adopting these models and generative AI in your organization’s environments, Azure OpenAI is a practical way to put large language models to work.

Azure OpenAI

Azure OpenAI stands as a beacon in this context, offering a tailored environment to deploy, manage, and harness these groundbreaking AI models.

Azure OpenAI not only provides the robust infrastructure necessary for the computational demands of LLMs but also places paramount importance on security, privacy, and control. In a world where data breaches and privacy concerns make headlines, leveraging LLMs through Azure ensures that businesses can maintain the confidentiality and integrity of their data.

Furthermore, Azure OpenAI’s seamless integration capabilities are a game-changer. Companies can effortlessly connect these advanced AI models with their existing applications and systems. This interoperability translates to enhanced user experiences, smarter application functionalities, and a competitive edge in today’s digital marketplace.

In essence, Azure OpenAI is the bridge that connects the promise of models like OpenAI’s LLMs with real-world applications, all while upholding the gold standards of security and adaptability.

For existing and new Azure customers in particular, this service is a good way to use your data with these models while keeping the data where it is, with no need to move it to an external service and risk exposing it.

Azure OpenAI offers connectivity to the suite of OpenAI models, encompassing GPT-3, Codex, and the embedding model series. These models cater to tasks such as content creation, summary generation, semantic searching, and converting natural language into code. You can engage with the service through the REST API, the Python SDK, or directly via the web interface in Azure OpenAI Studio.
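To make the REST option a little more concrete, here is a minimal sketch that builds (but does not send) a chat completions request using only the Python standard library. The resource name, deployment name, key, and the `api-version` value are placeholders and assumptions for illustration, and `build_chat_request` is a hypothetical helper, not part of any SDK. Check the current Azure OpenAI REST reference for the API version your resource supports.

```python
import json
import urllib.request


def build_chat_request(resource, deployment, api_key, user_message,
                       api_version="2023-05-15"):
    """Build (but do not send) a chat completions request for Azure OpenAI.

    The URL pattern and the `api-key` header follow the Azure OpenAI REST API;
    the default api_version is an assumption and may need updating.
    """
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
        "max_tokens": 200,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Placeholder values: replace with your own resource, deployment, and key.
request = build_chat_request(
    "my-resource",
    "my-gpt-deployment",
    "<your-key>",
    "Summarize our returns policy in one sentence.",
)
print(request.full_url)
# To actually call the service: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes it easy to inspect the URL and payload before spending any tokens.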

Getting started with Azure OpenAI

The first step to get started with Azure OpenAI is to ensure you have access to the service on Azure. Currently the service is in public preview, so you need to request access to it. You can request access to the service by filling out the form on the Azure OpenAI page. Once you have access, you can create an Azure OpenAI resource in the Azure portal.

Once you create your Azure OpenAI resource, then you need to access the Azure OpenAI Studio. You can access the Azure OpenAI Studio by clicking on the OpenAI Studio button on the Azure OpenAI resource page in the Azure portal or by going to the Azure OpenAI Studio directly.

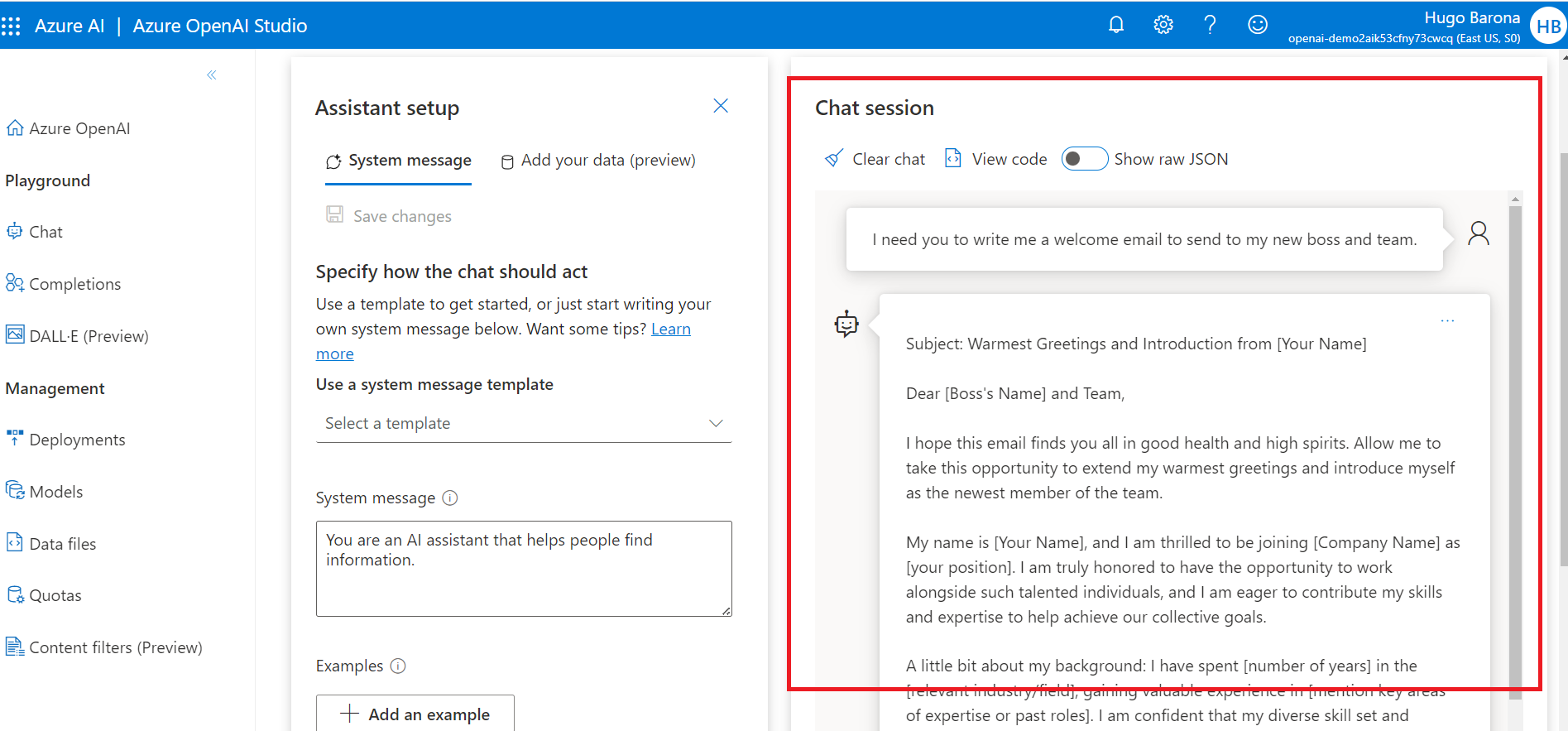

Once you access the Azure OpenAI Studio, you will be presented with its landing page, shown in the screenshot. There are two main categories of options: Playground and Management. The Playground option allows you to try the different models available, including GPT-3 and GPT-4 for the chat experience and DALL-E for image creation. You can also see the list of models available in the Azure OpenAI Studio by clicking on the Models option on the left navigation bar. The Playground is a great way to try out the models and see how they work, without the need to write any code. You can also view the sample code behind what you try in the Playground, which is a great way to learn how to use the models in your own applications.

The Chat experience lets you take a step further and try out a chat experience, similar to ChatGPT, with the GPT-3 and GPT-4 models. You can also add your own data using different types of data sources, including Azure Cognitive Search, Azure Blob Storage, and data files of different types, such as txt, pdf, html, and others. This is a great way to leverage your data with the models, without the need to implement processes to move your data.

Playground

As mentioned above, the playground is a great way to try out the models and see how they work, without the need to write any code. It is especially useful for those who are not familiar with the models or do not have the coding skills to build applications with them. You can try out the models in the playground and showcase their capabilities to your team and stakeholders. You can also use your own data there.

If you want to work with images, you can also use the playground to try out the DALL-E model, which generates images from text descriptions.

Customize your models

You can also prepare your datasets and use the studio, the REST API or even Python to fine-tune your models using your data, and then use the models in your applications. This is a great way to leverage your data and build models and applications that are tailored to your needs.

To create a custom model, you always start from a base model, which your custom model is then fine-tuned from. You can use some of the base models available on Azure OpenAI, including ada, babbage, and curie. More information about the base models can be found in the Azure OpenAI documentation.
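As an illustration of the dataset preparation step, the sketch below writes training examples as JSON Lines with `prompt` and `completion` fields, the layout commonly used when fine-tuning base models such as ada, babbage, and curie. The example records and the `to_jsonl` helper are hypothetical, and the exact format requirements should be confirmed against the fine-tuning documentation for the model you choose.

```python
import json

# Hypothetical training examples: each record pairs a prompt with the
# completion the fine-tuned model should learn to produce.
examples = [
    {"prompt": "Ticket: I cannot log in to the portal ->", "completion": " access"},
    {"prompt": "Ticket: My invoice total is wrong ->", "completion": " billing"},
    {"prompt": "Ticket: The app crashes on startup ->", "completion": " bug"},
]


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    lines = []
    for record in records:
        if set(record) != {"prompt", "completion"}:
            raise ValueError("each record needs exactly 'prompt' and 'completion'")
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


training_data = to_jsonl(examples)
print(training_data)

# To save the file for upload through the studio, REST API, or Python:
# with open("training.jsonl", "w", encoding="utf-8") as f:
#     f.write(training_data)
```

Validating the keys before writing catches malformed records early, which is cheaper than discovering them after an upload fails.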

Integrate Azure OpenAI with your applications and ecosystem

There are different ways you can integrate your Azure OpenAI models with your applications and ecosystem. Depending on your scenario, you may want to use the REST API, the Python SDK or even the Azure OpenAI Studio.

In this section I want to share with you some use cases and scenarios where you can leverage Azure OpenAI and integrate it with your applications and ecosystem, by focusing on the architecture and the data handling.

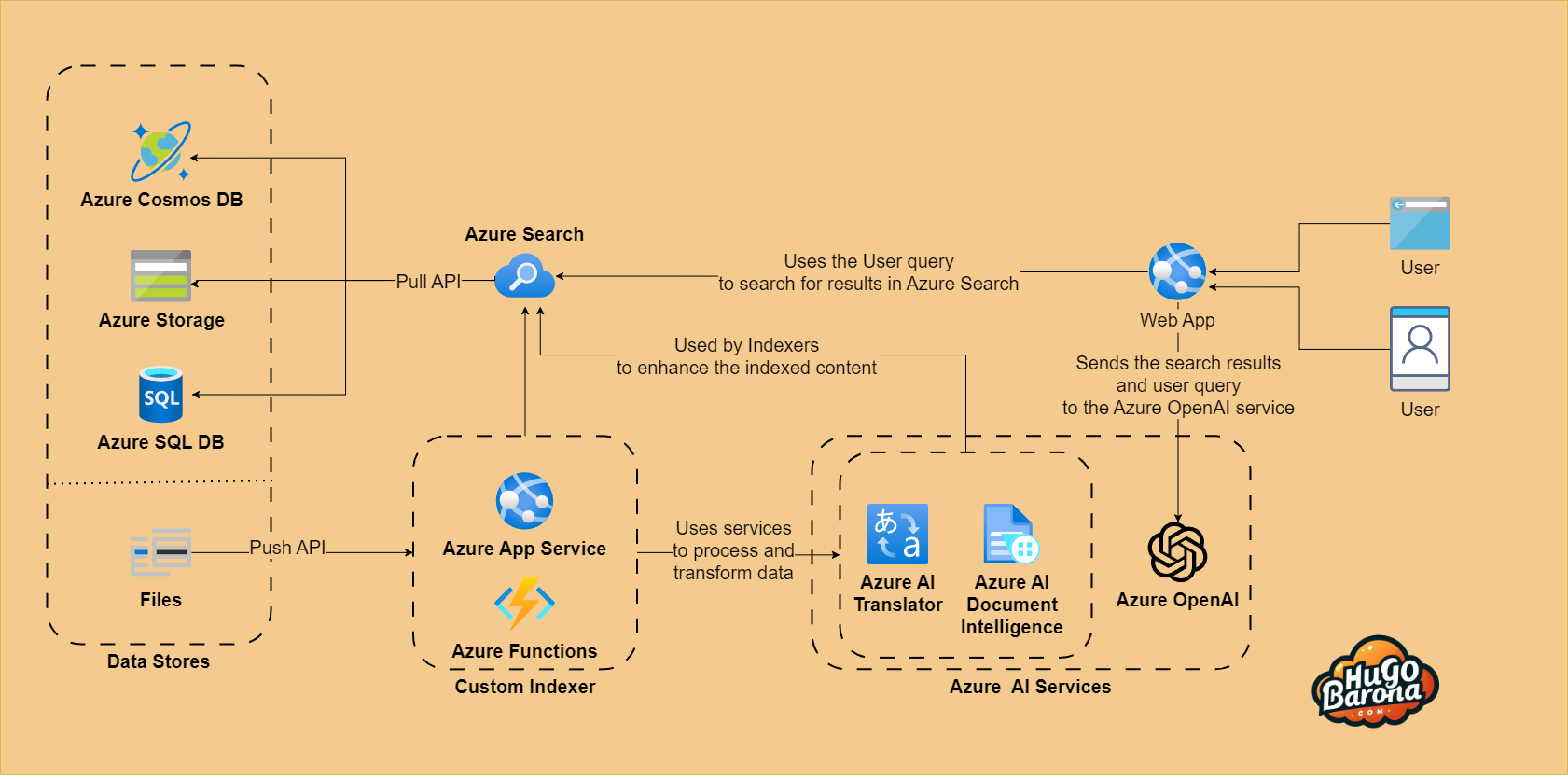

The architecture diagram in this section presents a scenario of using the Azure OpenAI service and integrating it with your existing web applications, so that your end-users can query your custom data and retrieve intelligent responses and insights from it. It contains a workflow integrating Azure data storage services, Azure Search, Azure AI services, and Azure OpenAI.

Azure OpenAI Sample Architecture Diagram

Diagram services

- Data stores

  - Azure Cosmos DB: A globally distributed database for various applications.

  - Azure Storage: Offers cloud storage solutions like Azure Blob and Azure Files.

  - Azure SQL DB: A fully managed relational database service.

  - Files: Represents either traditional file storage systems or Azure Files.

- Azure App Service: A platform for building, deploying, and scaling web apps.

- Azure Functions: A serverless compute service that lets you run event-driven code without having to manage infrastructure.

- Azure AI Services

  - Azure AI Translator: Offers real-time text translation.

  - Azure AI Document Intelligence: Extracts, processes, or categorizes content from documents.

  - Azure OpenAI: Represents an integration of OpenAI’s capabilities and models within the Azure ecosystem.

- Azure Cognitive Search: Azure Search provides a cloud search service for you to incorporate its search capabilities into your applications.

Data Indexing

There are two common ways to populate your Azure Search indexes with your own or external data: the push method and the pull method. The push method requires you to build an application that pushes the data programmatically. This method is typically used when you store your data in a data store not supported by Azure Search. The pull method leverages a built-in Azure Search feature called indexers. With this method, you don’t need to write code. You only need to configure the indexer to pull data from your data store and store it in your Azure Search index. This method is typically used when you store your data in a data store supported by Azure Search, such as Azure Blob Storage, Azure Cosmos DB, Azure SQL DB, Azure Table Storage, Azure Files, and others.
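The difference between the two methods can be sketched as the JSON payloads each one involves. In the push method, your application sends batches of documents to the index's `docs/index` endpoint, each tagged with an `@search.action`. In the pull method, you declare an indexer that ties a data source to a target index. The helper functions, index, data source, and field names below are hypothetical, and the two-hour schedule is only an example value.

```python
import json


def build_push_batch(documents, action="upload"):
    """Push method: wrap documents in the batch body sent by your own code.

    The body is POSTed to .../indexes/<index-name>/docs/index on the search
    service, with the service's API key in the request headers.
    """
    return {"value": [{"@search.action": action, **doc} for doc in documents]}


def build_indexer_definition(name, data_source_name, target_index_name,
                             interval="PT2H"):
    """Pull method: describe an indexer that crawls a supported data source."""
    return {
        "name": name,
        "dataSourceName": data_source_name,
        "targetIndexName": target_index_name,
        "schedule": {"interval": interval},  # ISO 8601 duration
    }


# Hypothetical documents and resource names, for illustration only.
batch = build_push_batch([
    {"id": "1", "title": "Returns policy", "content": "Items can be returned within 30 days."},
    {"id": "2", "title": "Shipping", "content": "Orders ship within 2 business days."},
])
indexer = build_indexer_definition("blob-indexer", "blob-datasource", "docs-index")

print(json.dumps(batch, indent=2))
print(json.dumps(indexer, indent=2))
```

With push, your code controls when and what gets indexed. With pull, the service does the crawling on the schedule you configure.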

Data Transformation / Enhancements

With both indexing methods, you can enhance and transform your data using Azure AI services. For example, you can use Azure AI Translator to translate your data into different languages, and Azure AI Document Intelligence to extract information from your documents and store that information in your Azure Search indexes. With the push method, you need to write code that calls the REST APIs of the AI services you want to use. With the pull method, you can use the Skillsets feature of Azure Search to apply the different AI services while indexing your data.
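For the pull method, a skillset is a JSON definition attached to an indexer. The sketch below builds one with a single translation skill that reads each document's content and emits a translated copy. The skillset name, the `/document/content` source path, and the `translated_text` target name are assumptions for illustration, and `build_translation_skillset` is a hypothetical helper. Verify the skill's properties against the current skillset reference before using it.

```python
import json


def build_translation_skillset(name, target_language="en"):
    """Build a skillset definition with one built-in translation skill."""
    return {
        "name": name,
        "description": "Translate document content during indexing",
        "skills": [
            {
                "@odata.type": "#Microsoft.Skills.Text.TranslationSkill",
                "context": "/document",
                "defaultToLanguageCode": target_language,
                # Input: the text extracted from each source document.
                "inputs": [{"name": "text", "source": "/document/content"}],
                # Output: the translated text, exposed under a new field name
                # that the indexer can map to an index field.
                "outputs": [
                    {"name": "translatedText", "targetName": "translated_text"}
                ],
            }
        ],
    }


skillset = build_translation_skillset("translate-skillset", "en")
print(json.dumps(skillset, indent=2))
```

The indexer then references the skillset by name, so the enrichment runs as part of each indexing pass without any custom code.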

Azure Search

By using Azure Search, you can apply the Retrieval-Augmented Generation (RAG) architecture. The RAG architecture enhances the functionality of a Large Language Model (LLM), such as the models behind ChatGPT, by integrating an information retrieval mechanism that gives you greater control over the data the LLM uses when crafting its replies. By applying this, you can control the data your end-users access and use. In the context of an organization, using the RAG approach ensures that the natural language processing is tailored to the enterprise’s content, which may come from vectorized content like documents, images, audio, and video.
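The RAG flow can be sketched in a few lines: retrieve the most relevant documents, then pass them to the model as grounding context alongside the user's question. In the sketch below, `retrieve` is a toy keyword-overlap function standing in for a real Azure Search query, and the documents and the system prompt wording are hypothetical. The resulting messages are what you would send to a chat model deployment.

```python
def retrieve(query, documents, top=2):
    """Toy retriever (a stand-in for Azure Search): rank by shared words."""
    query_terms = set(query.lower().split())
    scored = []
    for doc in documents:
        overlap = len(query_terms & set(doc["content"].lower().split()))
        if overlap:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top]]


def build_grounded_messages(question, sources):
    """Assemble chat messages that restrict the model to the retrieved sources."""
    context = "\n".join(f"[{doc['id']}] {doc['content']}" for doc in sources)
    system = (
        "Answer using only the sources below. "
        "If the answer is not in them, say you do not know.\n\n"
        "Sources:\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


# Hypothetical enterprise content, for illustration only.
documents = [
    {"id": "policy-1", "content": "Employees may work remotely up to three days per week."},
    {"id": "policy-2", "content": "Expense reports are due by the fifth day of each month."},
]

question = "How many days can employees work remotely?"
sources = retrieve(question, documents)
messages = build_grounded_messages(question, sources)
print(messages[0]["content"])
```

Because only retrieved documents reach the prompt, the retrieval layer is where you enforce which content a given user is allowed to see.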

Summary of the architecture

In summary, this architecture showcases an integrated search and response system. A user’s query fetches results from Azure Search, which indexes data from multiple Azure storage solutions. This data might undergo transformations or translations using Azure AI services. The refined data is then processed by Azure OpenAI to provide intelligent responses or insights. The entire workflow facilitates quick, accurate, and context-aware interactions for end-users.

Conclusion

This is just a simple example of how you can integrate Azure OpenAI with your applications and ecosystem, with minimal impact on your existing architecture and data handling. By doing this, you can adopt generative AI and add new capabilities to your existing applications. You can also use Azure OpenAI to build new applications and solutions, leveraging the different models available, including GPT-3, GPT-4, and DALL-E.

If you want to explore more examples and use cases, you can check out the Azure Architecture Center.